
The Human Brain: Our source of inspiration for Artificial Intelligence Solutions

By Muralidhar Sridhar

VP – AI & Machine Learning, OVP & Analytics, PFT

November 21, 2019

Artificial Intelligence (AI) is fast gaining ground as the next big disruptor in the Media & Entertainment (M&E) industry. Over the last year, content creators across the globe have actively begun to adopt AI tools in order to enhance efficiencies, unlock revenue opportunities, and deliver interactive viewing experiences. The uptake has already started in sports production and OTT distribution, with entertainment following close behind. This evolution has largely been made possible by the emergence of industry-specific AI solutions.

For a few years now, content creators have had access to off-the-shelf AI solutions that identify facets such as faces, objects, actions, emotions, and transcripts. These have formed the building blocks for customized solutions that serve the specific needs of content creators. The gap between AI technology and a solution that can actually solve M&E use cases is now being bridged.

Let’s take promo creation as an example. Promo producers and editors spend significant time selecting the right content clips to build effective promos. We studied this process carefully and realized that AI can play a major role in streamlining it. We decided to craft a media recognition engine, CLEAR™ Vision Cloud, to solve various use cases for content creators, including promo creation. However, an engine that simply recognizes facets within the content was not good enough. In order to build context and deliver useful results, we needed to create a layer of ‘wisdom’ on top of recognition. And what better source of inspiration for wisdom could there be than the human brain?

Mapping AI to human cognition

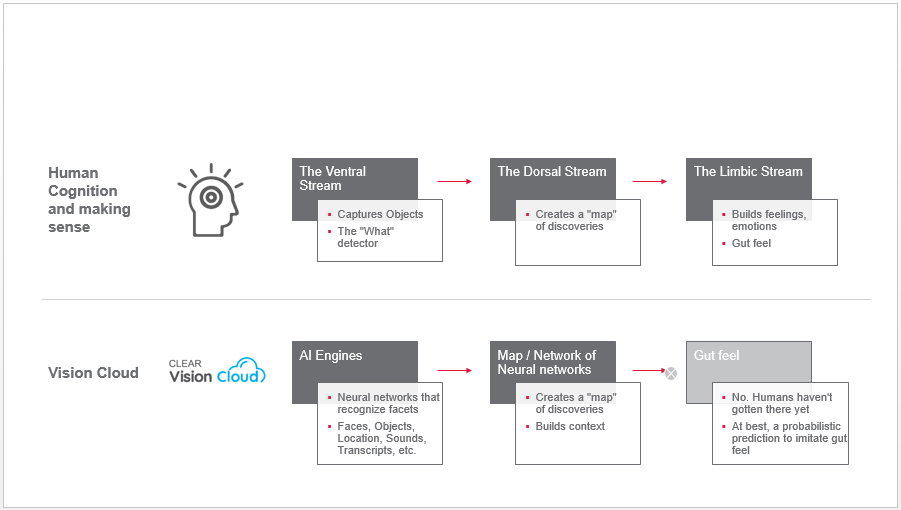

Among the many theories on how the human brain works, our focus was on the multi-stream theory. Here’s an overview:

- As sensory signals, visual and auditory, arrive at the visual cortex of the brain, they branch out in three streams – Ventral, Dorsal and Limbic.

- The Ventral stream performs the ‘what’ function – it recognizes objects and entities in visual and auditory inputs

- The Dorsal stream performs the ‘where’ function – it processes the object’s spatial location in a context and creates a ‘map’ of discoveries

- The Limbic stream performs the ‘emotion’ function and the ‘gut feel prediction’

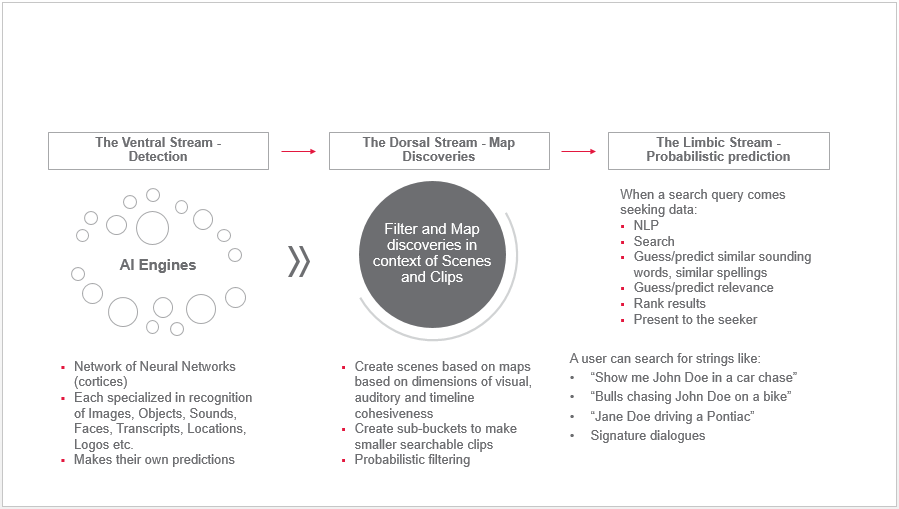

Researchers believe that these streams work together to help humans build context. So we replicated this concept in our AI engine, and got Neural Networks to behave like cortices of the human brain, recognizing various audio-video facets and then creating a map of these discoveries in a context. Here, context is a space-time combination that the engine identifies logically for each particular genre of content. In entertainment content, this will be a logical, meaningful scene with a set of clips that tell a small part of the bigger story. Finally, we added a probabilistic prediction dimension to guess what the user may be looking for, even if it does not exactly match the way the engine describes a scene. The result was a media recognition engine that works well for both entertainment and sports content use cases.
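To make the three steps a little more concrete, here is a minimal Python sketch of the idea, not the actual Vision Cloud implementation. Every name in it (`Facet`, `Scene`, `build_scenes`, `match_score`) is hypothetical, and the scoring rule (average of the best confidence per requested label) is an assumption chosen only to illustrate a probabilistic match.

```python
from dataclasses import dataclass, field


@dataclass
class Facet:
    """One recognized item (the 'what'): hypothetical structure."""
    label: str         # e.g. "car" or "John Doe"
    kind: str          # e.g. "object", "person", "action"
    start: float       # seconds from the start of the content
    end: float
    confidence: float  # recognizer confidence in [0, 1]


@dataclass
class Scene:
    """A time-bounded context that facets are mapped into (the 'where')."""
    start: float
    end: float
    facets: list = field(default_factory=list)


def build_scenes(facets, boundaries):
    """Place each facet in the scene whose time range contains its start."""
    scenes = [Scene(s, e) for s, e in zip(boundaries, boundaries[1:])]
    for facet in facets:
        for scene in scenes:
            if scene.start <= facet.start < scene.end:
                scene.facets.append(facet)
                break
    return scenes


def match_score(scene, wanted_labels):
    """Assumed scoring rule: mean of the best confidence for each wanted
    label, counting a label that is absent from the scene as 0."""
    if not wanted_labels:
        return 0.0
    total = 0.0
    for label in wanted_labels:
        total += max(
            (f.confidence for f in scene.facets if f.label == label),
            default=0.0,
        )
    return total / len(wanted_labels)
```

A search would then rank scenes by `match_score`, so a scene that contains only some of the requested facets can still surface with a lower score instead of being dropped outright.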

Solving real-life use cases

For entertainment, the same content is recognized from different perspectives by a group of Neural Networks that identifies objects, people, transcripts, logos, etc. The context is created in a different layer as logical scenes and clips, into which these facets are processed with probabilistic filtering.

For sports, the main unit of the game forms the context, such as a point in tennis, a ball delivery in cricket, or a pitch in baseball. The engine recognizes content, processes it, and makes sense of it in the context of this unit.
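As a rough illustration of treating the unit of play as the context, the sketch below groups a stream of recognized events into units. It assumes, purely for the example, that each unit begins with a known marker event (such as a serve in tennis); the function name `group_by_unit` and the event format are hypothetical.

```python
def group_by_unit(events, unit_start_label):
    """Group (timestamp, label) events into units of play.

    A new unit opens whenever `unit_start_label` is seen. Events that
    occur before the first unit starts are ignored.
    """
    units = []
    for timestamp, label in sorted(events):
        if label == unit_start_label:
            units.append([])
        if units:
            units[-1].append((timestamp, label))
    return units
```

For a tennis feed, calling `group_by_unit(events, "serve")` would return one list of events per point, and each list can then be analyzed on its own.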

Using this approach, we were able to create what we call ‘Machine Wisdom’, a patent-pending technology that helps solve specific use cases for the M&E industry. These include:

- Content Discovery and Search: Vision Cloud automatically identifies logical scenes and searchable clips in content and provides accurate, actionable, contextual, and comprehensive sets of metadata. An editor can search for scenes using natural language. For example, the editor can simply search for strings like “Show me John Doe in a car chase”, “Bulls chasing John Doe on a bike”, or “Jane Doe driving a Pontiac”, or even for a signature dialogue.

- Sports: Vision Cloud automatically tracks sports action and creates highlights and packages in near real-time to engage with fans like never before. It helps sports producers cut down the time and effort involved in highlight creation by 60% and enables OTT platforms to deliver high levels of interactivity and personalization for consumers. The engine identifies storytelling graphics and events in footage along with game content to stitch together an end-to-end story in highlights, just like a human editor.

Today, advances in AI and Machine Learning are opening up new possibilities for building innovative solutions to specific use cases. However, creativity and strategic thinking continue to differentiate humans from artificially intelligent entities. We have still not fully understood all the factors that make our intelligence unique, let alone been able to mimic them on machines. The good news is that solutions like Vision Cloud enable creative teams to leverage AI for automating repetitive tasks, achieving faster time-to-market, and freeing their personnel to focus on more creative work.
